The monitoring environment is built on Docker and Docker Compose.

Install the exporters

Exporters collect performance metrics from the various software components and servers.

Linux server

```yaml
version: '3'
services:
  reporter_node: # Server metrics exporter, used to monitor remote hosts
    image: prom/node-exporter:v1.7.0
    restart: always
    container_name: reporter_node
    user: root
    healthcheck:
      test: ["CMD", "nc", "-zv", "localhost", "9100"]
      interval: 6s
      timeout: 5s
      retries: 10
    ports:
      - 9100:9100
```

Service registry: ZooKeeper

ZooKeeper already provides a metrics endpoint, so no additional software needs to be installed.
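In ZooKeeper 3.6 and later this endpoint comes from the built-in Prometheus metrics provider, which has to be switched on in zoo.cfg. The fragment below is a sketch assuming the official `zookeeper` Docker image, whose `ZOO_CFG_EXTRA` variable appends extra lines to zoo.cfg; the service name and image tag are assumptions. Port 7070 matches the `cluster_zookeeper` target in the Prometheus configuration.

```yaml
services:
  zookeeper: # hypothetical service name; adapt to your existing ZooKeeper service
    image: zookeeper:3.8 # assumption: official image; ZooKeeper 3.6+ is required
    environment:
      # Appended to zoo.cfg: enable the Prometheus metrics provider on port 7070
      - ZOO_CFG_EXTRA=metricsProvider.className=org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider metricsProvider.httpPort=7070
    ports:
      - 7070:7070
```

For a ZooKeeper that is not run from this image, the same two settings can be added to zoo.cfg directly, followed by a restart.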

Message broker: Kafka

⚠️ Note

The credentials in the startup script must match those of the environment being monitored.

```yaml
version: '3'
services:
  reporter_kafka: # [Application software]
    # Collects Kafka metrics; must be on the same LAN as Kafka
    image: danielqsj/kafka-exporter:v1.7.0
    restart: always
    container_name: reporter_kafka
    command:
      - '--kafka.server=localhost:29092' # Kafka broker 1 address
      # - '--kafka.server=${SERVER_HOST_DOMAIN}:9092' # Kafka broker 2 address
      - '--sasl.enabled' # Kafka auth (1/4): enable SASL
      - '--sasl.mechanism=scram-sha512' # Kafka auth (2/4): SASL mechanism
      - '--sasl.username=thingskit' # Kafka auth (3/4): username
      - '--sasl.password=thingskit' # Kafka auth (4/4): password
    # volumes:
    #   - /_makeFile/_certs:/bitnami/kafka/config/certs:ro # Self-signed (root) certificate and key
    ports:
      - 9098:9308
    env_file:
      # - /_makeFile/_common.env
      - /_makeFile/miscroservice.env
```
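Because the credentials must match the monitored Kafka cluster, one option is to keep them out of the compose file and let Docker Compose substitute them from a .env file or the shell environment. The sketch below assumes hypothetical variable names `KAFKA_BROKER`, `KAFKA_SASL_USER` and `KAFKA_SASL_PASSWORD`. Unless the container uses host networking, `localhost` inside the exporter container refers to the container itself, so the broker address should be one the container can actually reach.

```yaml
version: '3'
services:
  reporter_kafka:
    image: danielqsj/kafka-exporter:v1.7.0
    restart: always
    container_name: reporter_kafka
    command:
      # Hypothetical variables, substituted by Docker Compose from .env or the shell;
      # ":?" aborts startup with a message if KAFKA_BROKER is unset
      - '--kafka.server=${KAFKA_BROKER:?set KAFKA_BROKER}'
      # Repeat --kafka.server once per additional broker if needed
      - '--sasl.enabled'
      - '--sasl.mechanism=scram-sha512'
      - '--sasl.username=${KAFKA_SASL_USER}'
      - '--sasl.password=${KAFKA_SASL_PASSWORD}'
    ports:
      - 9098:9308
```

A matching .env file next to the compose file would then hold lines such as KAFKA_BROKER=192.0.2.10:9092 (a placeholder address).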

Cache: Redis

⚠️ Note

The credentials in the startup script must match those of the environment being monitored.

```yaml
version: '3'
services:
  reporter_redis: # [Application software] Prometheus, Grafana dashboard 763
    image: oliver006/redis_exporter:v1.55.0
    restart: always
    container_name: reporter_redis
    ports:
      - 9121:9121
    environment:
      - REDIS_EXPORTER_NAMESPACE=redis
      - REDIS_ADDR=redis://localhost:6379
      - REDIS_PASSWORD=litangyuan
```

Relational database: PostgreSQL

⚠️ Note

The credentials in the startup script must match those of the environment being monitored.

```yaml
version: '3'
services:
  reporter_pgsql: # [Application software]
    # PostgreSQL monitoring: https://github.com/prometheus-community/postgres_exporter
    image: prometheuscommunity/postgres-exporter:v0.15.0
    restart: always
    container_name: reporter_pgsql
    # user: root
    healthcheck:
      test: ["CMD", "nc", "-zv", "localhost", "9187"]
      interval: 6s
      timeout: 5s
      retries: 10
    ports:
      - 9187:9187
    environment:
      - DATA_SOURCE_URI=localhost:5432/thingskit?sslmode=disable
      - DATA_SOURCE_USER=postgres
      - DATA_SOURCE_PASS=thingskit
```

Load balancer: HAProxy

⚠️ Note

The credentials in the startup script must match those of the environment being monitored.

```yaml
version: '3'
services:
  reporter_haproxy: # [Application software] Prometheus, Grafana dashboard 12708
    # HAProxy monitoring
    image: quay.io/prometheus/haproxy-exporter:latest
    restart: always
    container_name: reporter_haproxy
    healthcheck:
      test: ["CMD", "nc", "-zv", "localhost", "9101"]
      interval: 6s
      timeout: 5s
      retries: 10
    ports:
      - 9101:9101
    environment:
      - SCRAPE_URI=http://172.30.69.207:9999/status
```
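The upstream haproxy_exporter reads its scrape address from the `--haproxy.scrape-uri` command-line flag and parses HAProxy's CSV statistics, which the stats page serves when `;csv` is appended to its URI. If the exporter keeps reporting `haproxy_up 0` with the environment variable alone, a variant passing the flag may help. This is a sketch: the stats address is reused from the example above and must match the `stats uri` configured in your HAProxy.

```yaml
version: '3'
services:
  reporter_haproxy:
    image: quay.io/prometheus/haproxy-exporter:latest
    restart: always
    container_name: reporter_haproxy
    command:
      # ";csv" selects the CSV export of the HAProxy stats page
      - '--haproxy.scrape-uri=http://172.30.69.207:9999/status;csv'
    ports:
      - 9101:9101
```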

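Platform service components

Common platform service components include the core service, the rule engine and the device-access services (MQTT, TCP/UDP, GB/T 28181).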

⚠️ Note

Add the environment variable METRICS_ENDPOINTS_EXPOSE=prometheus to each service component.
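A minimal sketch of adding the variable through a Compose override file (a docker-compose.override.yml placed next to the main compose file is merged automatically). The service names are taken from the Prometheus targets in this guide; `METRICS_ENABLED=true` is an assumption based on ThingsBoard's configuration and may not be required in every build.

```yaml
# docker-compose.override.yml (hypothetical file), merged with the main compose file
services:
  tb-core1:
    environment:
      # Expose the Actuator Prometheus endpoint scraped at /actuator/prometheus
      - METRICS_ENDPOINTS_EXPOSE=prometheus
      # Assumption: ThingsBoard-based builds may also need metrics switched on
      - METRICS_ENABLED=true
  tb-rule-engine1:
    environment:
      - METRICS_ENDPOINTS_EXPOSE=prometheus
      - METRICS_ENABLED=true
```

The same two lines are repeated for every other service listed in the Prometheus configuration, such as the transport services.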

Install Prometheus

Prometheus is an open-source monitoring and alerting system built on a time-series database.

```yaml
version: '3'
services:
  prometheus:
    image: prom/prometheus:v2.42.0
    volumes:
      - ./conf/prometheus/:/etc/prometheus/
    command:
      - '--config.file=/etc/prometheus/prometheus.yml'
    ports:
      - 9090:9090
    restart: always
    container_name: prometheus
    user: root
```

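⚠️ Note

Change the job_name values in the configuration file with care: changing some of them will break the Grafana dashboards.

⚠️ Note

Adjust the targets values in the configuration file to match your actual deployment. The file goes in conf/prometheus/prometheus.yml, which the compose file above mounts at /etc/prometheus/.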

```yaml
global:
  scrape_interval: 15s # By default, scrape targets every 15 seconds.
  evaluation_interval: 15s # Evaluate rules every 15 seconds.
  # scrape_timeout is set to the global default (10s).

  # Attach these labels to any time series or alerts when communicating with
  # external systems (federation, remote storage, Alertmanager).
  external_labels:
    monitor: 'thingskit'

# Scrape configurations. The first job scrapes Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: 'prometheus'
    scrape_interval: 5s
    static_configs:
      - targets: ['localhost:9090']

  - job_name: 'cluster_nodes' # Server node metrics
    static_configs:
      - targets: ['localhost:9100']

  - job_name: 'cluster_kafka'
    # metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['localhost:9098']

  - job_name: 'cluster_redis'
    # metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['localhost:9121']

  - job_name: 'cluster_zookeeper'
    # metrics_path: /metrics
    static_configs:
      - targets: ['localhost:7070','localhost:7070']

  - job_name: 'cluster_pgsql'
    # metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['localhost:9187']

  - job_name: 'cassandra_cluster'
    # metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['localhost:7199']

  - job_name: 'cluster_nginx'
    # metrics_path: /metrics
    static_configs:
      - targets: ['localhost:9113']

  - job_name: 'cluster_haproxy'
    # metrics_path: /metrics
    static_configs:
      - targets: ['localhost:9101']

  - job_name: 'tb-core1'
    metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['tb-core1:8080']

  - job_name: 'tb-core2'
    metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['tb-core2:8080']

  - job_name: 'tb-core3'
    metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['tb-core3:8080']

  - job_name: 'tb-rule-engine1'
    metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['tb-rule-engine1:8080']

  - job_name: 'tb-rule-engine2'
    metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['tb-rule-engine2:8080']

  - job_name: 'tb-rule-engine3'
    metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['tb-rule-engine3:8080']

  - job_name: 'tb-mqtt-transport1'
    metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['tb-mqtt-transport1:8081']

  - job_name: 'tb-mqtt-transport2'
    metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['tb-mqtt-transport2:8081']

  - job_name: 'tb-mqtt-transport3'
    metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['tb-mqtt-transport3:8081']

  - job_name: 'tb-http-transport1'
    metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['tb-http-transport1:8081']

  - job_name: 'tb-http-transport2'
    metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['tb-http-transport2:8081']

  - job_name: 'tb-http-transport3'
    metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['tb-http-transport3:8081']

  - job_name: 'tb-coap-transport'
    metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['tb-coap-transport:8081']

  - job_name: 'tb-lwm2m-transport'
    metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['tb-lwm2m-transport:8081']

  - job_name: 'tb-snmp-transport'
    metrics_path: /actuator/prometheus
    static_configs:
      - targets: ['tb-snmp-transport:8081']
```
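Targets are resolved from inside the Prometheus container, so localhost there means the container itself; in practice the targets are real host addresses, or service names on a shared Docker network. To monitor several hosts while keeping the job names the dashboards rely on, list every address under the same job. A sketch with hypothetical addresses from the 192.0.2.0/24 documentation range:

```yaml
scrape_configs:
  - job_name: 'cluster_nodes' # keep the job name the dashboards expect
    static_configs:
      # Hypothetical host addresses, one node-exporter per host
      - targets: ['192.0.2.11:9100', '192.0.2.12:9100', '192.0.2.13:9100']

  - job_name: 'cluster_zookeeper'
    static_configs:
      # Hypothetical three-node ZooKeeper ensemble, metrics provider on port 7070
      - targets: ['192.0.2.21:7070', '192.0.2.22:7070', '192.0.2.23:7070']
```

After editing the file, restart the container or send Prometheus a SIGHUP (for example docker kill --signal=HUP prometheus) to reload the configuration.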

Install Grafana

```yaml
version: '3'
services:
  grafana:
    image: grafana/grafana
    user: "472"
    depends_on:
      - prometheus
    ports:
      - 3000:3000
    volumes:
      - ./conf/grafana/:/etc/grafana/provisioning/
    env_file:
      - ./conf/grafana/monitor.env
    restart: always
    container_name: grafana
```

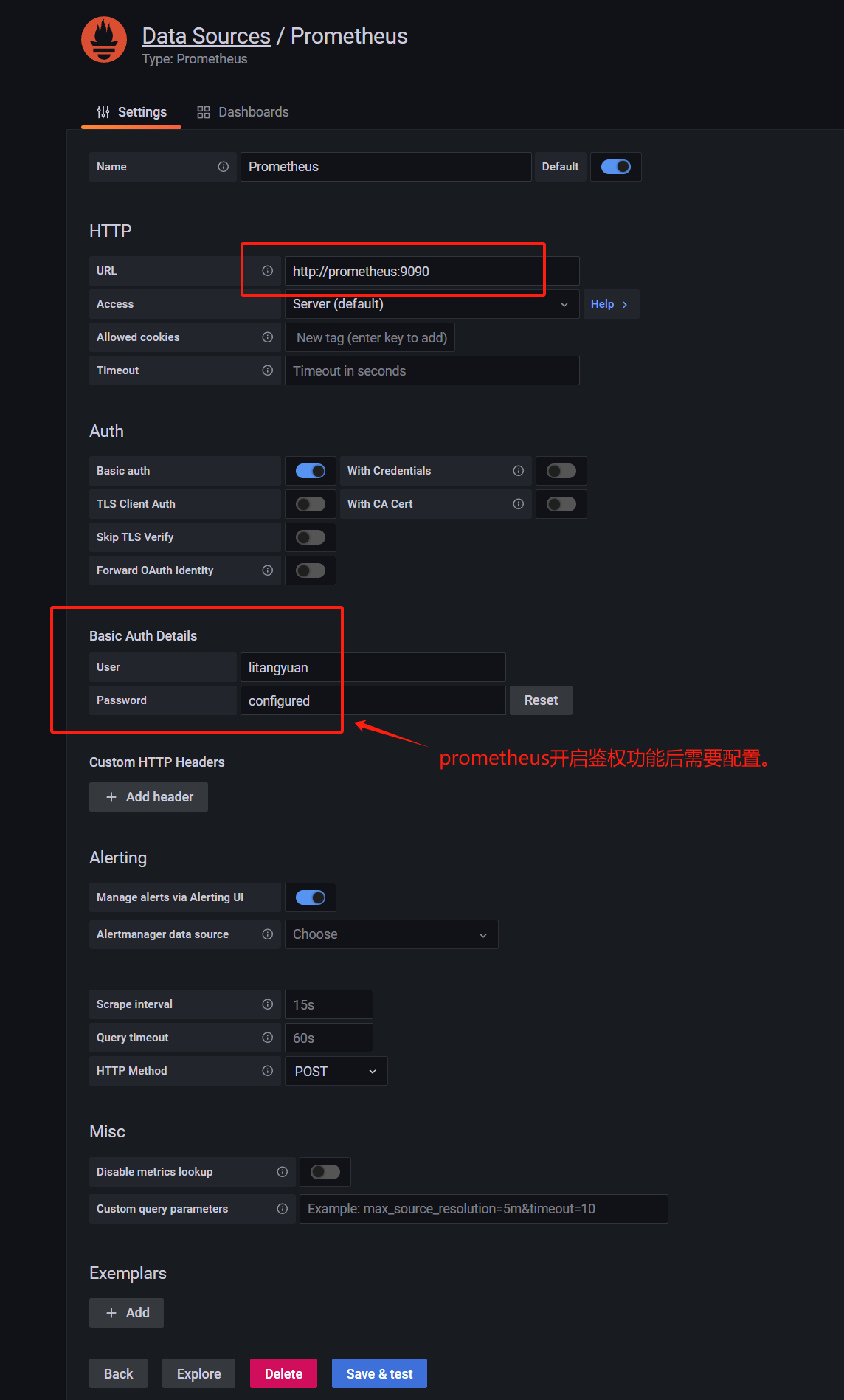

Configure the data source

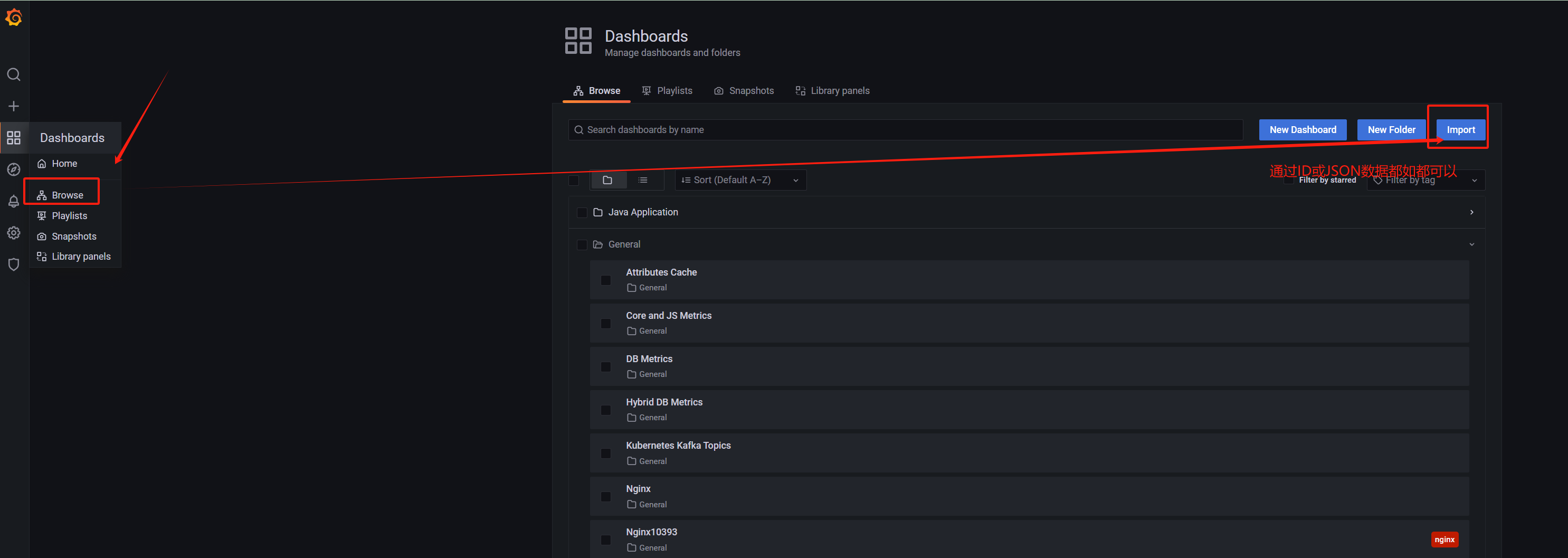
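Because the compose file mounts ./conf/grafana/ as Grafana's provisioning directory, the Prometheus data source can also be declared in a file instead of through the UI. A sketch, saved under a hypothetical name such as conf/grafana/datasources/prometheus.yml; the URL assumes Grafana and Prometheus share a Docker network on which the container name prometheus resolves.

```yaml
# conf/grafana/datasources/prometheus.yml (hypothetical file name)
apiVersion: 1
datasources:
  - name: Prometheus
    type: prometheus
    access: proxy # Grafana's backend sends the queries to Prometheus
    url: http://prometheus:9090 # assumes a shared Docker network
    isDefault: true
```

Grafana reads provisioning files at startup, so the container needs a restart after the file is added.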

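Import dashboards

Dashboard marketplace

Linux server

Template ID: 1860, 16522

Service registry: ZooKeeper

Template ID: 10465

Message broker: Kafka

Template ID: 12460, 10122

Cache: Redis

Template ID: 763, 11835

Relational database: PostgreSQL

Template ID: 9628

Load balancer: HAProxy

Template ID: 2428, 12693

Platform service components

Template ID: 4701, 11378, 11955, 14430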

In addition to the marketplace templates, ThingsBoard also provides service-specific dashboards.